by Jon Chun and Katherine Elkins

Generative AI is a transformative force, reshaping both arts and humanities computing. Its recent evolution retraces our own human evolution, only on a vastly accelerated scale.

In the Beginning Was the Word

Working with language seemed like it would be a simple task for computers. Given how easily machines compute complex math, how hard could it be? The answer turned out to be very.

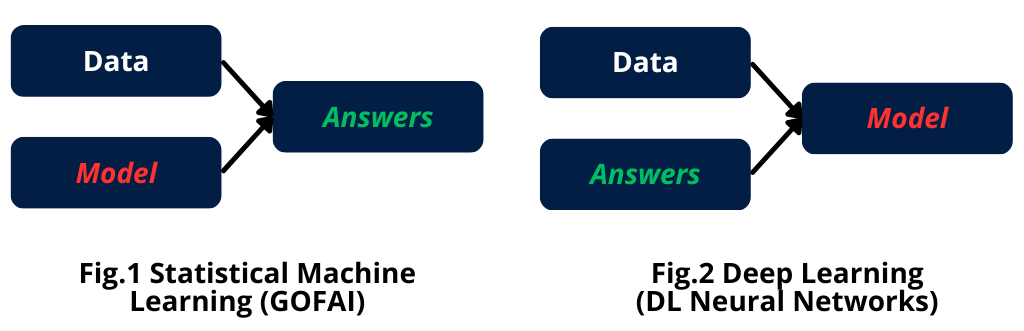

Traditional or Good Old-Fashioned AI (GOFAI) focused on explicit symbolic rules and models carefully crafted by experts. The advent of Deep Learning, powered by the hardware gains of Moore’s Law and by big data, enabled a paradigm shift that eliminated the need for explicit rules.

Some argued that the Large Language Models (LLMs) built on this new paradigm only simulate understanding, much like “stochastic parrots.”

Applied at scale, however, Deep Learning LLMs exhibit complex emergent properties, just as human biology arises from simple DNA. With scale, new capabilities appear, from summarization to theory of mind.

Exploring the World

Could true intelligence arise from a disembodied “brain in a vat”? How much of our world can be captured by the words on which LLMs are trained? It turns out, more than we thought. ChatGPT, for instance, has demonstrated the ability to generate spatial maps.

But to realize general intelligence, AI agents need embodiment to explore unconstrained environments and learn firsthand. This entails multi-modal AI capable of learning through multiple inputs like sight and sound. Today, AI robots are beginning to explore and interact with the world in just this way.

Mastery of Tools

LLMs, like humans, also have intrinsic limitations. Like us, they overcome these by using and creating tools. Current integration with external databases and search engines reduces so-called “hallucinations.” Plug-ins and APIs enable LLMs to write programs, send email, and manipulate complex symbolic models.

Just as human intelligence exploded once our ancestors mastered tools, this stage could unlock an exponential takeoff in intelligence. With tools, AI could more easily engineer its own self-improvement and augment its capabilities beyond human comprehension.

AI Gets Creative (and Emotional)

AI now ventures into creative domains: art, music, and writing. Until recently, automation had largely replaced manual labor characterized as dirty and dangerous, difficult and dull.

Generative AI can write and design, analyze and program. Intellectual property lawsuits and Hollywood writers’ strikes are only isolated examples of looming disruptions.

Alongside these creative powers comes emotional intelligence. Emotional Intelligence and AffectiveAI delve into recognizing, modeling, and influencing human emotions, forging a path toward more empathetic AI systems.

Already, our connected devices feed data to hungry AffectiveAI models, and AI may soon know us better than we know ourselves. Once algorithms excel at modeling collective psychology, we may find our minds and behaviors shaped by forces beyond our awareness.

AI as Social Agent Collectives

Just as social collaboration propelled human civilization forward, a society of collaborative AI agents can orchestrate complex tasks with a symphony of specialized skills. Rather than one monolithic model, the latest natural language model, GPT-4, is likely a coordinated mixture of experts.

Some theorists envision a future in which humans directly interface with AI collectives through brain-computer interfaces. More immediate questions involve how to align AI agents with human values.

Conclusion

Addressing the Human-AI value alignment problem is crucial to ensure the AIs we develop are in service to humanity. It’s a pivotal moment for those of us in humanities and arts computing to have a voice in shaping our AI future.

As the articles in this special volume on times of crisis make manifest, part of this influence lies in educating the next generation. There are a variety of interconnected questions that span the range of human experience and organization, and to study any of these in isolation is insufficient.

But we also have a role to play in the coming conversations about the future of work, the limitations we may wish to place on artificial agents, and the ethical, legal and societal questions we now face. To ensure that the AIs we develop are in service to humanity, we must first solve the human-human alignment dilemma. Before we decide on values for our machines, we must first agree, however much we are able, on which values are shared by humans.

We are grateful for journals like the International Journal of Humanities and Arts Computing that provide forums to consider these questions. Arts and humanities computing is having a moment, and there is no better time, with the spotlight upon us, to do something with the moment that’s been given us.

About the journal

IJHAC is one of the world’s premier multi-disciplinary, peer-reviewed forums for research on all aspects of arts and humanities computing. The journal focuses on conceptual and theoretical approaches as well as on case studies and essays demonstrating how advanced information technologies can further scholarly understanding of traditional topics in the arts and humanities.

Sign up for TOC alerts, recommend to your library, learn how to submit an article and subscribe to IJHAC.

About the authors

Jon Chun is co-founder of the world’s first human-centered AI curriculum at Kenyon College, where he also teaches. He is an engineer, computer scientist and former Silicon Valley startup CEO who has worked in medicine, finance, and network security in the US, Asia, and Latin America. His most recent AI research focuses on NLP, multimodal AffectiveAI, LLMs and XAI/FATE. He also consults for both industry and startups.

Katherine Elkins is Professor of Humanities and faculty in Computing at Kenyon College, where she teaches in the Integrated Program in Humane Studies. She writes about the age-old conversation between philosophy and literature as well as the more recent conversation about AI, language and art. Recent books include The Shapes of Stories with Cambridge University Press, which explores the emotional arc underlying narrative. In addition to articles on authors ranging from Plato and Sappho to Woolf and Kafka, her latest research explores AI ethics and explainability in Large Language Models like GPT-4. She consults on AI regulation and fairness, and can also be heard talking about new developments in AI on podcasts like Radio AI and streaming networks like Al Jazeera.