by Laurence Diver

Tell us a bit about your book

Digisprudence is about the technologies that govern our behaviour, and how they can be designed in ways that are compatible with democracy. We've probably all felt that frustration when using a smartphone or a website: the sense that we're in some way being controlled or manipulated in what we are able to do. That might seem unimportant, but technology is powerful. Imagine a car that won't go faster than 70mph, no matter how hard you press the accelerator (even to avoid an accident or to get someone to the hospital). Or think about the convoluted process you have to go through to delete your Facebook account, especially if you want the deletion to happen immediately (this could have significant implications in cases of doxing or other forms of online harassment).

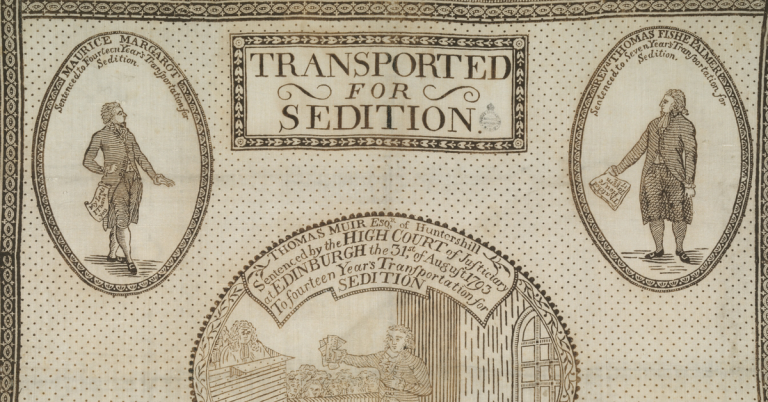

In effect, the choices made by the designer of a system impose rules on you. But those rules are not like legal rules, whose meaning you as a citizen are able to interpret, or which you can even choose to ignore (think of the speeding example above). Computer code simply imposes itself, often without any input from the citizen ('user'). My argument is that this is a problem in a democracy. We tend to think that parliaments can't make whatever laws they like: they are constrained in the first place by a constitution, and after the fact by the courts, which can consider whether a rule is legitimate after it is made[1]. I think that, essentially, the same constraints should apply to code: designers and developers shouldn't be able to make whatever rules they like if those rules affect the behaviour and actions of citizens. If we don't accept arbitrary law, we shouldn't accept arbitrary code.

Digisprudence charts this problem, setting up a parallel between unacceptable laws and unacceptable code. It then presents a set of design features, or digisprudential affordances, which can help code avoid imposing arbitrary control over its users. These features are about how the system relates to those interacting with it, and to the legal system more widely: choice, transparency (of operation, purpose, and provenance), delay, oversight, and contestability (by the user and via the courts).

Systems that include those features will be compatible with the underlying values of democracy, at least at a foundational level (they will still need to comply with other laws, such as those governing intellectual property and data protection).

Finally, Digisprudence is a ‘reboot’ for three main reasons.

First, because it takes the idea of code controlling us, a.k.a. ‘code as law’, and builds on it by analysing our immediate interactions and relationships with technology in greater depth than before.

Second, because it uses longstanding theories from law as a platform to underpin and justify the digisprudential affordances.

And third, because it opens the black box of how code is actually made, with a view to making a difference in practice.

What inspired you to research this area?

Although I have an academic background in law, I've been tinkering with programming since I was a kid in the late 90s. After undergraduate studies and a spell as a researcher at the Scottish Law Commission, I worked for a few years as a professional web developer. It became clear to me that I had a strange, and perhaps not entirely legitimate, power over the interactions between users and the products I was creating. Even with the best of intentions, it seemed odd to me that I, like millions of other developers around the world, had this ability to 'legislate' design rules that would control part of someone else's behaviour.

More broadly, like a lot of people I’ve had the sense since the early 2000s – and especially with the rise of Facebook – that there’s something unethical about how technological architectures are so effective at structuring our behaviour and actions. Combined with my own experience as a developer, that was the seed of my interest in this area. Of course, technology ethics has been an academic concern for decades and in the past 20 or so years has become a really significant field. I thought that there was something useful to be said about the crossover between legal theory and technology design, especially given the very important differences between law and ethics.

What was the most exciting thing about this project for you?

I find the strand of legal research that deals with the 'materiality' of how law is done really interesting. Law is often quite an abstract field, even when it deals with real-life situations. Questions of how rules become reality, whether through people's actions or via the architectures that surround us, are extremely interesting, and relevant not just to legal academics but to all of us as citizens.

How we analyse those questions when those architectures are digital, and created by commercial actors, is fascinating and important from a democratic perspective. It opens up a lot of really exciting and fundamental questions at the crossover between law and computers.

Has your research in this area changed the way you see the world today?

I’ve come to realise just how often people assume that computers and Artificial Intelligence are essentially good things that can solve the world’s problems. This is the case for many in academia, civil society, and government (both domestic and international). There is a place for these technologies, of course, but the tendency to frame a problem in a way that leads to a technological solution is very common. This is problematic because it leads to the wrong kinds of solutions to the wrong kinds of problem.

In that respect my own thinking has changed over time: as a technology enthusiast myself, I can see a clear evolution in my views. I now have a deeper appreciation of the tension between the developer's 'solve the problem with the tools that I have' mindset and the 'are these the correct tools, and is this even the correct problem?' approach of the philosopher, ethicist, or lawyer. Having a foot in both camps has really helped me to understand how each side views the other.

What’s next for you?

I’m currently a postdoc in an ERC Advanced Grant project called Counting as a Human Being in the Era of Computational Law, or COHUBICOL. One can probably appreciate the overlap between the book and the focus of the project! Our work really deepens the ‘dual view’ I described above: we are a cross-disciplinary team, comprising lawyers and computer scientists. Our focus is on the deep assumptions of both fields, and how they complement and collide with one another. This is especially important when computer systems become more and more embedded in the practice of law, which is something relevant to all of us.

[1] One might recall the Johnson government’s attempt to prorogue the UK Parliament in 2019 – that was found to be illegal by the Supreme Court, and reversed (or more accurately, the court found that the prorogation had never happened in the first place).

Pre-order your copy today

Digisprudence: Code as Law Rebooted

Reboots the debate on ‘code as law’ to present a new cross-disciplinary direction that sheds light on the fundamental issue of software legitimacy

- Reinvigorates the debate at the intersection of legal theory, philosophy of technology, STS and design practice

- Synthesises theories of legitimate legal rulemaking with practical knowledge of code production tools and practice

- Proposes a set of affordances that can legitimise code in line with an ecological view of legality

- Draws on contemporary technologies as case studies, examining blockchain applications and the Internet of Things

About the author

Laurence Diver is a postdoctoral researcher in COHUBICOL (Counting as a Human Being in the Era of Computational Law) as part of the Research Group on Law, Science, Technology and Society at the Free University of Brussels-VUB. Laurence has contributed to a number of journals, including SCRIPTed (where he is also Technical Editor); International Review of Law, Computers and Technology; and Artificial Intelligence and Law. He is also co-founder of the Journal of Cross-disciplinary Research in Computational Law (CRCL).